intermezzOS

An operating system for learning

Preface

This book describes the intermezzOS project. intermezzOS is a hobby operating system, specifically targeted at showing beginners how to get into operating systems development. Rather than describe some sort of final OS, it instead proceeds in a tutorial-like fashion, allowing you to implement intermezzOS yourself, alongside the book.

The book assumes that you have programmed in some language before, but not any particular one. In fact, people who have not done low-level programming before are a specific target of this book; I’ll be explaining a lot of things that other resources will just assume that you know.

intermezzOS is implemented in Rust, and some assembly code. We’ll try to explain Rust along the way, but may refer you to its documentation when things get tricky. This book isn’t really about learning Rust, but you might accidentally along the way.

You can find all of this stuff on GitHub.

This book is in the book repository, the kernel is in kernel, and the

website is there too. Feel free to open issues on the RFCs

repo if you want to discuss things

in a general sense, and send bug reports and PRs to the appropriate repos

if you’d like to help with a particular component.

The Story

A long time ago, in college, my friends and I were working on a hobby operating system, XOmB. It was... tough. Frankly, while I learned a lot, I was a pretty minor contributor. I got frustrated too easily. One day, I found Ruby, and I was pretty much done with low-level programming. I had done it most of my life, and I was bored. Bored, and sick of dealing with core dumps.

Those details aren’t that important. What is important is that over the years, I’ve always wanted to get back into this stuff. But the problem is this: there are a lot of people who do hobby operating system work, but... I don’t like their attitudes.

You see, a lot of people see low-level programming as some kind of superior, only-for-the-smartest kind of thing. They have a puritanical world-view: “I suffered to learn this, so you too must suffer to build character.” I think that’s short sighted. Low level programming is difficult to get into, but that says more about the teachers’ faults than the students’.

Anyway, as my professional life has moved back towards the low level, I’ve been thinking about this topic a lot again. That’s when I found an awesome link: Writing an OS in Rust by Philipp Oppermann. I cannot speak enough about how awesome Phil’s tutorial is; it single-handedly inspired me to get back into operating systems.

The big difference with Phil’s tutorial is that it doesn’t treat you as being stupid for not knowing ‘the basics’. It doesn’t say “spend hours debugging this thing, because I did.” It doesn’t insult you for being new. It just explains the basics of a kernel.

It’s amazing how much a little bit of a framing can completely change the way you see something. When the examples I found were all about how you have to be an amazing rockstar ninja and we won’t give you all the code because you suck if you can’t figure it out, I hated this stuff. When it was kind, understanding, and helpful, I couldn’t get enough.

Once I got to a certain part in Phil’s tutorial, I started implementing stuff myself. A lot of the initial code here is going to be similar to Phil’s. But I’m going to write about it anyway. There’s a good reason for that:

Writing is nature’s way of showing us how sloppy our thinking is.

- Leslie Lamport

By re-explaining things in my own words, I hope to understand it even better. This is just a perpetual theme with me: I like teaching because it helps me learn. I like writing because it helps me understand.

The first section of the book is going to be clear about where we’re following Phil, and where we break off and go into our own little world. After the start, things will end up diverging.

Furthermore, I will not commit to any kind of schedule for this project. It’s going to be in my spare time, and I’m learning a lot of this as I go, too.

The Name

The nomad has a territory; he follows customary paths; he goes from one point to another; he is not ignorant of points (water points, dwelling points, assembly points, etc.). But the question is what in nomad life is a principle and what is only a consequence. To begin with, although the points determine paths, they are strictly subordinated to the paths they determine, the reverse happens with the sedentary. The water point is reached only in order to be left behind; every point is a relay and exists only as a relay. A path is always between two points, but the in-between has taken on all the consistency and enjoys both an autonomy and a direction of its own. The life of the nomad is the intermezzo.

Deleuze and Guattari, “A Thousand Plateaus”, p380

If you’re not into particular kinds of philosophy, this quote won’t mean a lot. Let’s look at the dictionary definition:

An intermezzo, in the most general sense, is a composition which fits between other musical or dramatic entities, such as acts of a play or movements of a larger musical work.

I want this project to be about learning. Learning is often referred to as a journey. You start off in ignorance and end in knowledge. In other words, ‘learning’ is that part in the middle, the in-between state.

The tricky thing about learning is, you never stop learning. Once you learn something, there’s something new to learn, and you’re on a journey again.

If you want to learn a lot, then you’ll find yourself perpetually in the middle.

There is another sense by which this name makes sense: as we’ll learn in the beginning of the book, operating systems are largely about abstractions. And abstractions are themselves ‘in the middle’, between what they’re abstracting and who they are abstracting it for.

Principles

Here are the guiding principles of intermezzOS:

- We’re all actual people. Please treat each other as such.

- We’re all here to learn. Let’s help each other learn, rather than being some kind of vanguard of knowledge.

- The only thing that matters about your language background is the amount you may have to learn.

- Everything must be documented, or it’s not done.

And of course, everything related to this project is under the Code of Conduct.

Background

Before we get going, we should probably have some idea of where we’re headed.

What is an OS?

It’s actually kind of difficult to define what an operating system is. There are a lot of different kinds of operating systems, and they all do different kinds of things.

Some things are commonly bundled with operating systems, but are arguably not part of the essence of what makes an OS an OS. For example, many operating systems are often marketed as coming equipped with a web browser or email client. Are web browsers and email clients essential to operating systems? Many would argue the answer is no.

There are some shared goals we can find among all operating systems, however. Let’s try this out as a working definition:

An operating system is a program that provides a platform for other programs. It provides two things to these programs: abstractions and isolation.

This is good enough for now. Let’s consider this a test for inclusion, but not exclusion. In other words, things that fit this definition are operating systems, but things that don’t may or may not be, we don’t quite know.

Creating abstractions

There are many reasons to create a platform for other programs, but a common one for operating systems is to abstract over hardware.

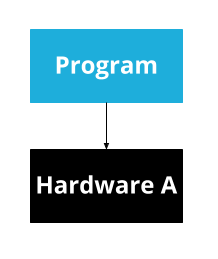

Consider a program, running on some hardware:

This program will need to know exactly about what kind of hardware exists. If you want to run it on a different computer, it will have to know exactly about that computer too. And if you want to write a second program, you’ll have to re-write a bunch of code for interacting with the hardware.

All problems in computer science can be solved by another level of indirection.

- David Wheeler

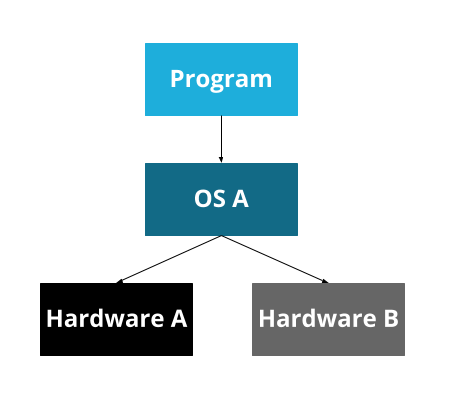

To solve this problem, we can introduce an abstraction:

Now, the operating system can handle the details of the hardware, and provide an API for it. A program can be written for that operating system’s API, and can then run on any hardware that the operating system supports.

At some point, though, we developed many operating systems. Since operating systems are platforms, most people pick one and have only that one on their computer. So now we have a problem that looks the same, but is a bit different: our program is now specific to an OS, rather than specific to a particular bit of hardware.

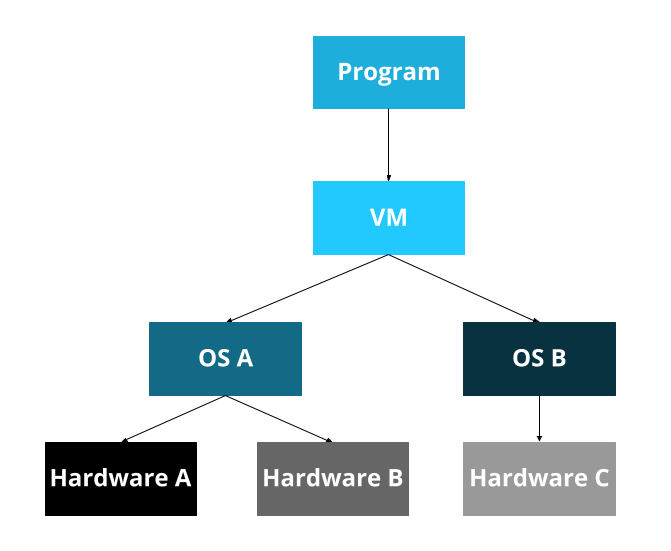

To solve this, some programming languages have a ‘virtual machine.’ This was a big selling point of Java, for example: the Java Virtual Machine. The idea here is that we create a virtual machine on top of the real machine.

Now, you write programs for the Java Virtual Machine, which is then ported to each operating system, which is then ported to all the hardware. Whew!

This, of course, leads to the corollary to the previous maxim:

...except for the problem of too many layers of indirection.

- Kevlin Henney

We now have a pattern:

- I have

A. Ais written explicitly forX...- ... but I want to support

XandY, - so I put abstraction

Bin the middle.

We will see this pattern over and over again. Hence ‘intermezzo’: abstractions are always in the middle.

Isolation

Many of the abstractions provided are, as we discussed, abstractions over hardware. And hardware often has a pretty serious restriction: only one program can access the hardware at a time. So if our operating system is going to be able to run multiple programs, which is a common feature of many operating systems, we’ll also need to make sure that multiple programs cannot access hardware at the same time.

This really applies to more than just hardware though: it also applies to shared resources (e.g. memory). Once we have two programs, it would be ideal to not let them mess with each other. Consider any sort of program that deals with your password: if programs could mess with each other’s memory and code, then a program could trivially steal your password from another program!

This is just one symptom of a general problem. It’s much better to isolate programs from each other, for a number of different reasons. For now, we’ll just consider isolation as one of our important jobs, as OS authors.

Wait a minute...

Here’s a question for you to ponder: if we didn’t provide isolation, isn’t that just a poor abstraction? In other words, if we had an abstraction where we could interact with other things being abstracted... isn’t that just a bad job of doing the abstraction? And in that sense, is the only thing an operating system does abstraction? Is the only thing everything does abstraction?

I don’t have answers for you. If you figure it out, let me know...

What kinds of OS are there?

Okay, so here’s the thing: operating systems are made up of a lot of components. The core component is called a ‘kernel’. The non-kernel bits of an operating system are collectively called a ‘userland’. Typically a kernel has more direct access to the machine than a userland and thus acts somewhat like a super user (with powers that even ‘sudo’ cannot give you). A kernel forms the basis of the abstractions and isolations. So, as OS developers, when we categorize operating systems, we tend to categorize them by what kinds of kernel they have.

By the way...

Although you may be used to hearing the term ‘Linux’ used as a name for an operating system, you may hear some people say, “It’s GNU/Linux, not Linux.” That’s because virtually all Linux distributions today use a Linux kernel + a GNU userland. So the GNU folks are a bit annoyed that the kernel gets all the credit. By the same token, a lot of people say ‘the kernel’ when they mean ‘the Linux kernel.’ This gets an entirely different set of people mad.

At the start, our ‘operating system’ will be just the kernel, and so we’ll tend to focus on kernels for the first part of our journey.

The way that we categorize different kernels largely comes down to “what is in the kernel and what is in userspace.” Upon reading this, you might then think the easiest kind of kernel to write is the smallest, where everything is in userspace. After all, smaller should be easier, right? Well... that’s not actually true. Or at least, it’s not clear that it’s true.

Monolithic kernels

First, we have ‘monolithic kernels’. ‘Mono’ meaning ‘one’. One big ol’ kernel. Most real-world kernels are monolithic kernels, or at least, pretend to be. Don’t worry about it. Linux, for example, is a monolithic kernel.

This means that monolithic kernels are kind of ‘the default’. Other kernels usually define themselves by solving some kind of problem that monolithic kernels have.

If a monolithic kernel were a web application, it would be a big ol’ Rails application. One repository. A million subdirectories. It may be a big ball of mud, but it pays the bills.

Microkernels

Microkernels are, well, micro. Smaller. A lot of the functionality that’s typically in the kernel is in userspace instead. This is a good idea in theory, but historically, microkernels have had issues. All that communication has overhead, which makes them slower.

Mach, the kernel that Mac OS X uses, is a microkernel. Well, sort of. It ended up being one, but Mac OS X uses a version of Mach from before that work was done... so it’s a bit blurry.

If a microkernel were a web application, it would be a microservice. And a bunch of the other stuff that’s in kernel space in a monolithic kernel are other microservices, but in userspace instead. It’s a bit cooler than a single monolithic web app by itself, and the communication is nice for flexibility’s sake, but has some overhead.

Exokernels & Unikernels

These two kinds of operating systems are closely related, but it’s a bit harder to dig into what exactly makes them different. Unikernels have one easy-to-describe feature: they only run one single program at a time. Exokernels are ‘more micro than micro’, but the details aren’t important right now.

The important thing to know here is that there are a lot of other kinds of designs than just monolithic vs. micro. There’s a lot of stuff to learn!

What kind are we making?

So, given all these kinds of operating systems, what kind are we making?

The answer is “it doesn’t even matter at first.” There’s some commonality in almost all of these styles of operating systems, and we have to get all that done before we even make those decisions.

Secondly, we could waste a lot of time trying to design our perfect OS. And then never actually build it. Remember, the goal here is to learn, not to make the best OS that ever existed. So really, what it ends up looking like just doesn’t really matter at all. Most hobby operating system projects die quite young.

Let’s focus on the doing, and less on the categorization, planning, and being. A nice thing about operating systems is that there’s a lot of freedom of direction. To tie it back into the example earlier, Phil’s tutorial starts going into memory-management after getting printing to the screen going. We’ll be going into keyboards first instead. There are, of course, some dependencies, but there’s also a lot of freedom.

What tools will we use?

Before we can make a kernel, we need to figure out the tools we’re going to use. The first question, of course, is what programming language?

In our case, we’re going to use two. The first one is the language that every kernel must use: assembly language.

Assembly

Assembly language gives us direct access to a specific machine. If the basis of computer science is abstraction, the very bottom of the software abstraction layer is assembly. Below it lies only hardware and physics.

There are many kinds of assembly languages each targeted at different ‘instruction set’ architectures (also known as ISA or simply as instruction sets). These instruction sets are the list of commands that a given CPU can understand. For example, if your computer has an Intel Pentium processor of some kind then it understands the x86 instruction set. So if you were to write assembly for another instruction set (say MIPS or ARM), you would not be able to run it on your computer.

This is one of the reasons we'll want to get away from the assembly world as fast as possible. If we want our kernel to work for a bunch of different architectures, any code we end up writing in assembly will need to be duplicated. However, if we use a more high-level language like C, C++ or the language we'll really be using, Rust, we can write the code once and cross-compile to different architectures.

Assembly language looks like this:

; foo.asm

section .data

global _start

_start:

mov rax, 0

loop:

add rax, 1

cmp rax, 10

jne loop

mov rbx, rax

mov rax, 1

int 80h

This is a little program in assembly language. If it looks totally alien to you, don't worry. While we could write our entire kernel in assembly, we'll only be learning as much assembly as we need to not have to use it any more.

When you write assembly language you are actually directly manipulating the individual registers of the CPU and memory inside of RAM and other hardware devices like CD drives or display screens.

By the way...

CPUs are composed of registers each of which can only hold small amounts of data. The amount of data a register can hold dictates what type of CPU the register belongs to. If you didn't know why your machine is classified as either 32 bit or 64 bit it's because the machine's registers can either hold 32 bits of data at a time or 64 bits at a time.

In assembly we can only do very simple things: move data between registers or to/from RAM; perform simple arithmetic like addition, subtraction, multiplication and division; compare values in different registers, and based on these comparisons jump to different points in our code (à la GOTO). Fancy high level concepts like while loops and if statements, let alone garbage collection are nowhere to be found. Even functions as you know them aren't really supported in assembly. Each assembly program is just a bunch of data in registers or in memory and a list of instructions, carried out one after the other.

For instance, in our code above we used the mov instruction several times to

move values into specific registers with weird names like rax and rbx. We

used the cmp instruction to compare the value inside of the rax register

with the number 10. We used the jne instruction to jump to another part of

our code if the numbers we just compared were not equal. Finally we used the int

instruction to trigger a hardware interrupt.

Again, you don't need to fully understand this program at this point. Right now you should just have an impression for how assembly is composed of simple instructions that do very simple things.

When it comes time to write some actual assembly code we'll touch on all this again.

Let's run this little program:

$ nasm -f elf64 foo.asm # assemble into foo.o

$ ld foo.o # link into a.out

$ ./a.out # run it

$ echo $? # print out the exit code

10

$

Don't worry too much about what programs we're using to actually compile (or ‘assemble’ as it's known in the assembly world) our program. We'll be going over each one of these commands and explaining what they are and how to use them.

Rust

We will augment our assembly with code written in Rust. In fact, we will be trying to get to Rust-land as quickly as we possibly can. Rust is a really great programming language, and it’s pretty great for writing operating systems. It has some rough edges, but they’re not too big of a deal.

Rust will allow us to write:

// foo.rs use std::process; fn main() { let mut a = 0; for _ in 0..10 { a = a + 1; } process::exit(a); }

This does the same thing as our assembly code:

$ rustc foo.rs # compile our Rust code to foo

$ ./foo # run it

$ echo $? # print out the exit code

10

$

That Rust code probably looks more like a programming language you’ve used in the past. It’s a lot nicer to write complex things in a higher-level programming language like Rust. That said, virtually all languages are higher-level than assembly, so that’s not saying all that much. Rust is still a low-level language by many standards.

So why choose Rust? Well, I’m picking it for two reasons:

- I love it.

- There aren’t a lot of kernels in it yet.

There are a suprising number of people working on kernels in Rust. But since it’s a newer language, there aren’t nearly as many as for older, more established languages.

Do I need to be a wizard?

No, you do not. A common theme of this project is “this is all we’ll need to know about this topic for now.” There’s no reason that you need to absolutely master everything before going forward. For example, in order to get Rust going, we need only about 100 lines of assembly, as mentioned above. Do you need to be a complete expert in assembly language to understand those well enough to keep going? Not at all. Will learning more about it help? Absolutely!

There’s nobody that’s monitoring your credentials to see if you’re allowed to move on. Do it at your own pace. Skip stuff. Come back when you don’t understand what’s going on. Try it, wait a week, and then try it again.

There’s no wrong way to do this stuff, including by being a beginner. Everyone was once. Don’t let anyone discourage you.

Setting up a development environment

Frankly, one of the hardest parts of starting an operating system is getting a development environment going. Normally, you’re doing work on the same operating system you’re developing for, and we don’t have that luxury. Yet!

There is a convention called a ‘target triple’ to describe a particular platform. It’s a ‘triple’ because it has three parts:

arch-kernel-userland

So, a target triple for a computer which has an x86-64 bit processor running a Linux kernel and the GNU userland would look like this:

x86_64-linux-gnu

However, it can also be useful to know the operating system as well, and so the ‘triple’ part can be extended to include it:

x86_64-unknown-linux-gnu

This is for some unknown Linux. If we were targeting Debian specifically, it would be:

x86_64-debian-linux-gnu

Since it’s four parts, it’s called a ‘target’ rather than a ‘target triple’, but you’ll still hear some people call it a triple anyway.

Kernels themselves don’t need to be for a specific userland, and so you’ll see ‘none’ get used:

x86_64-unknown-none

Hosts & Targets

The reason that they’re called a ‘target’ is that it’s the architecture you’re compiling to. The architecture you’re compiling from is called the ‘host architecture’.

If the target and the host are the same, we call it ‘compiling’. If they are different, we call it ‘cross-compiling’. So you’ll see people say things like

I cross-compiled from x86_64-linux-gnu to x86-unknown-none.

This means that the computer that the developer was using was a 64-bit GNU/Linux machine, but the final binary was for a 32-bit x86 machine with no OS.

So we need a slightly special environment to build our OS: we need to cross-compile from whatever kind of computer we are using to our new target.

Cheat codes

... but we can also cheat. It’s okay to cheat. Well, in this case, it’s really only okay at the start. We’ll eventually have to cross-compile, or things will go wrong.

Here’s the cheat: if you are developing on an x86_64 Linux machine, and you’re

not using any special Linux kernel features, then the difference between

x86_64-linux-gnu and x86_64-unknown-none is really just theoretical. It

will still technically work. For now.

This is a common pitfall with new operating system developers. They’ll start off with the cheat, and it will come back to haunt them later. Don’t worry; I will actually show you how to fix things before they go wrong. Knowing the difference here is still useful.

Installing Rust

First, you need to get a copy of Rust! There's one catch though: you'll need to get exactly the correct version of Rust. Unfortunately, for OS development, we need to take advantage of some cutting-edge features that aren't yet stable.

Luckily, the Rust project has a tool that makes it easy to switch between Rust

versions: rustup. You can get it from the install

page of the Rust website.

By default, rustup uses stable Rust. So let's tell it to install nightly:

$ rustup update nightly

This installs the current version of nightly Rust. We run all of the examples in this book under continuous integration, so we should know if something changes in nightly Rust and breaks. But please file bugs if something doesn't work.

Because nightly Rust includes unstable features, you shouldn't use it unless

you really need to, which is why rustup allows you to override the default

version only when you're in a particular directory. We don't have a directory

for our project yet, so let's create one:

$ mkdir intermezzOS

$ cd intermezzOS

A fun way to follow along is to pick a different name for your kernel, and then change it as we go. Call your kernel whatever you want. intermezzOS was almost called ‘Nucleus’, until I found out that there’s already a kernel with that name that’s installed on billions of embedded devices. Whoops!

Inside your project directory, set up the override:

$ rustup override add nightly

Nice and easy. We can't get the version wrong; rustup handles it for us.

Linux

Here are the tools we’re going to need:

nasmldgrub-mkrescue+xorrisoqemu

How to install the tools depends on your distribution.

On Debian you can install them with

$ sudo apt-get install nasm xorriso qemu build-essential

On Arch Linux you can install them with

$ sudo pacman -S --needed binutils grub mtools libisoburn nasm qemu

And on Fedora with

$ sudo dnf install nasm xorriso qemu

Note that if your Fedora is up-to-date enough you will need to call grub2-mkrescue command instead of grub-mkrescue.

Mac OS X

The tools you need are similar to the tools listed in the Linux instructions, but we will need to build a cross compiler in order to get things working. This is sort of complicated and boring, but we've done some of that boring work for you so you can get up and running quickly.

Make sure you have homebrew installed because you are going

to need it to install some of the tools. You are probably also going to need

Xcode if you don't already have

it. You may have to agree to the Xcode license before you use it. You can do

this by either opening Xcode and accepting the license agreement, or by running

sudo xcodebuild -license in the terminal, scrolling down to the bottom of the

license, and agreeing to it.

Download this script and read through it. There are a couple assumptions

that it makes about the paths where the source is downloaded to and where the

binaries are installed. You might want to change where those locations are.

When possible we try and use brew to install things, but there are some

programs we need to compile. The compiled special versions of the tools are

installed in ~/opt. This is so we don't clobber any version of them that you

may have already installed. The source code for these tools are downloaded in

~/src.

Here is what the script does:

brew installtools that it can likegmp,mpfr,libmpc,autoconf, andautomake- Download and compile tools in order to make a cross compiler:

binutils,gcc,objconv - Download and compile

grubusing the cross compiler

This might take some time to run.

After it is done you should have all the tools you need located in ~/opt. The

tools should be named the same as the tools used in other chapters, but they

might be prefixed with a x86_64-pc-elf-. The exception to this is grub. The

default path for the binaries installed using the above script is $HOME/opt/bin so adjust the PATH variable appropriately.

Windows

Windows 10

If you're using Windows 10, you can use Bash On Ubuntu on Windows to get going in an easy way.

Once you have installed Bash on Ubuntu on Windows, simply follow the Linux

instructions. You'll also need the grub-pc-bin package.

Finally, you'll need an "X server"; this will let us run intermezzOS in a graphical window. Any will do, but we've tried xming and it works well.

Finally, you'll need to run this:

$ export DISPLAY=:0

You can put it in your ~/.bashrc file to have it automatically work on each

session.

Other Windows Versions

I hope to have better instructions for Windows soon; since I don’t have a computer that runs it, I need to figure it out first. If you know how, this would be a great way to contribute.

Booting up

We’ve got some of the theory down, and we’ve got a development environment going. Let’s get down to actually writing some code, shall we?

Our first task is going to be the same as in any programming language: Hello world! It’s going to take a teeny bit more code than in many languages. For example, here’s “Hello, World!” in Ruby:

puts "Hello, world!"

Or in C:

#include<stdio.h>

int main(void) {

printf("Hello, world!");

}

But it’s not actually that much more work. It’s going to take us 28 lines to get there. And instead of a single command to build and run, like Ruby:

$ ruby hello_world.rb

It’s going to initially take us six commands to build and run our hello world kernel. Don’t worry, the next thing we’ll do is write a script to turn it back into a single command.

By the way, Appendix A has a list of solutions to common problems, if you end up getting stuck.

Multiboot headers

Let’s get going! The very first thing we’re going to do is create a ‘multiboot header’. What’s that, you ask? Well, to explain it, let’s take a small step back and talk about how a computer boots up.

One of the amazing and terrible things about the x86 architecture is that it’s maintained backwards compatibility throughout the years. This has been a competitive advantage, but it’s also meant that the boot process is largely a pile of hacks. Each time a new iteration comes out, a new step gets added to the process. That’s right, when your fancy new computer starts up, it thinks it’s an 8086 from 1976. And then, through a succession of steps, we transition through more and more modern architectures until we end at the latest and greatest.

The first mode is called ‘real mode’. This is a 16 bit mode that the original x86 chips used. The second is ‘protected mode’. This 32 bit mode adds new things on top of real mode. It’s called ‘protected’ because real mode sort of let you do whatever you wanted, even if it was a bad idea. Protected mode was the first time that the hardware enabled certain kinds of protections that allow us to exercise more control around such things as RAM. We’ll talk more about those details later.

The final mode is called ‘long mode’, and it’s 64 bits.

By the way...

Well, that’s actually a lie: there’s two. Initially, you’re not in long mode, you’re in ‘compatibility mode’. You see, when the industry was undergoing the transition from 32 to 64 bits, there were two options: the first was Intel’s Itanium 64-bit architecture. It did away with all of the stuff I just told you about. But that meant that programs had to be completely recompiled from scratch for the new chips. Intel’s big competitor, AMD, saw an opportunity here, and released a new set of chips called amd64. These chips were backwards compatible, and so you could run both 32 and 64 bit programs on them. Itanium wasn’t compelling enough to make the pain worth it, and so Intel released new chips that were compatible with amd64. The resulting architecture was then called x86_64, the one we’re using today. The moral of the story? Intel tried to save you from all of the stuff we’re about to do, but they failed. So we have to do it.

So that’s the task ahead of us: make the jump up the ladder and get to long mode. We can do it! Let’s talk more details.

Firmware and the BIOS

So let's begin by turning the power to our computer on.

When we press the power button, electricity starts running, and a special piece of software, known as the BIOS in Intel land, automatically runs.

With the BIOS we're already in the land of software, but unlike software that you may be used to writing, the BIOS comes bundled with its computer and is located in read-only memory (ROM). While changing or updating stuff in ROM is possible, it's not something you can do by invoking your favorite package manager or by downloading something from some website. In fact some ROM is literally hardwired into the computer and cannot be changed without physically swapping it out. This makes sense here. The BIOS and the computer are lifetime partners. Their existence doesn't make much sense without each other.

One of the first things the BIOS does is run a ‘POST’ or power-on self-test which checks for the availability and integrity of all the pieces of hardware that the computer needs including the BIOS itself, CPU registers, RAM, etc. If you've ever heard a computer beeping at you as it boots up, that's the POST reporting its findings.

Assuming no problems are found, the BIOS starts the real booting process.

By the way...

For a while now most commercial computer manufacturers have hidden their BIOS booting process behind some sort of splash screen. It's usually possible to see the BIOS' logs by pressing some collection of keys when your computer is starting up.

The BIOS also has a menu where you can see information about the computer like CPU and memory specs and all the hardware the BIOS detected like hard drives and CD and DVD drives. Typically this menu is accessed by pressing some other weird collection of keyboard keys while the computer is attempting to boot.

The BIOS automatically finds a ‘bootable drive’ by looking in certain pre-determined places like the computer's hard drive and CD and DVD drives. A drive is ‘bootable’ if it contains software that can finish the booting process. In the BIOS menu you can usually change in what order the BIOS looks for bootable drives or tell it to boot from a specific drive.

The BIOS knows it's found a bootable drive by looking at the first few kilobytes of the drive and looking for some magical numbers set in that drive's memory. This won't be the last time some magical numbers or hacky sounding things are used on our way to building an OS. Such is life at such a low level...

When the BIOS has found its bootable drive, it loads part of the drive into memory and transfers execution to it. With this process, we move away from what comes dictated by the computer manufacturer and move ever closer to getting our OS running.

Bootloaders

The part of our bootable drive that gets executed is called a ‘bootloader’, since it loads things at boot time. The bootloader’s job is to take our kernel, put it into memory, and then transition control to it.

Some people start their operating systems journey by writing a bootloader. We will not be doing that. Frankly, this whole startup process is more of an exercise in reading manuals and understanding the history of esoteric hardware than it is anything else. That stuff may interest you, and maybe someday we’ll come back and write a bootloader of our own.

In the interest of actually getting around to implementing a kernel, instead, we’ll use an existing bootloader: GRUB.

GRUB and Multiboot

GRUB stands for ‘grand unified bootloader’, and it’s a common one for GNU/Linux systems. GRUB implements a specification called Multiboot, which is a set of conventions for how a kernel should get loaded into memory. By following the Multiboot specification, we can let GRUB load our kernel.

The way that we do this is through a ‘header’. We’ll put some information in a format that multiboot specifies right at the start of our kernel. GRUB will read this information, and follow it to do the right thing.

One other advantage of using GRUB: it will handle the transition from real mode to protected mode for us, skipping the first step. We don’t even need to know anything about all of that old stuff. If you’re curious about the kinds of things you would have needed to know, put “A20 line” into your favorite search engine, and get ready to cry yourself to sleep.

Writing our own Multiboot header

I said we were gonna get to the code, and then I went on about more history.

Sorry about that! It’s code time for real! Inside your project directory, make

a new file called multiboot_header.asm, and open it in your favorite editor.

I use vim, but you should feel free to use anything you’d like.

$ touch multiboot_header.asm

$ vim multiboot_header.asm

Two notes about this: first of all, we’re just making this source file in the top level. Don’t worry, we’ll clean house later. Remember: we’re going to build stuff, and then abstract it afterwards. It’s easier to start with a mess and clean it up than it is to try to get it perfect on the first try.

Second, this is a .asm file, which is short for ‘assembly’. That’s right, we’re

going to write some assembly code here. Don’t worry! It’s not super hard.

An aside about assembly

Have you ever watched Rich Hickey’s talk “Simple vs. Easy”? It’s a wonderful talk. In it, he draws a distinction between these two words, which are commonly used as synonyms.

TODO https://github.com/intermezzOS/book/issues/27

Assembly coding is simple, but that doesn’t mean that it’s easy. We’ll be doing a little bit of assembly programming to build our operating system, but we don’t need to know that much. It is completely learnable, even for someone coming from a high-level language. You might need to practice a bit, and take it slow, but I believe in you. You’ve got this.

The Magic Number

Our first assembly file will be almost entirely data, not code. Here’s the first line:

dd 0xe85250d6 ; magic number

Ugh! Gibberish! Let’s start with the semicolon (;). It’s a comment, that

lasts until the end of the line. This particular comment says ‘magic number’.

As we said, you’ll be seeing a lot of magic numbers in your operating system work.

The idea of a magic number is that it’s completely and utterly arbitrary. It

doesn’t mean anything. It’s just magic. The very first thing that the multiboot

specification requires is that we have the magic number 0xe85250d6 right

at the start.

By the way...

Wondering how a number can have letters inside of it?

0xe85250d6is written in hexadecimal notation. Hexadecimal is an example of a "numeral system" which is a fancy term for a system for conveying numbers. The numeral system you're probably most familiar with is the decimal system which conveys numbers using a combination of the symbols0-9. Hexadecimal on the other hand uses a combination of 16 symbols:0-9anda-f. Along with its fellow numeral system, binary, hexadecimal is used a lot in low level programming. In order to tell if a number is written in hexadecimal, you may be tempted to look for the use of letters in the number, but a more surefire way is to look for a leading0x. While100isn't a hexadecimal number,0x100is. To learn more about hexadecimal and binary check this out.

What’s the value in having an arbitrary number there? Well, it’s a kind of safeguard against bad things happening. This is one of the ways in which we can check that we actually have a real multiboot header. If it doesn’t have the magic number, something has gone wrong, and we can throw an error.

I have no idea why it’s 0xe85250d6, and I don’t need to care. It just is.

Finally, the dd. It’s short for ‘define double word’. It declares that we’re

going to stick some 32-bit data at this location. Remember, when x86 first started,

it was a 16-bit architecture set. That meant that the amount of data that could be

held in a CPU register (or one ‘word’ as it's commonly known) was 16 bits.

To transition to a 32-bit architecture without losing backwards compatibility,

x86 got the concept of a ‘double word’ or double 16 bits.

The mode code

Okay, time to add a second line:

dd 0xe85250d6 ; magic number

dd 0 ; protected mode code

This is another form of magic number. We want to boot into protected mode, and

so we put a zero here, using dd again. If we wanted GRUB to do something

else, we could look up another code, but this is the one that we want.

Header length

The next thing that’s required is a header length. We could use dd and count

out exactly how many bytes that our header is, but there’s two reasons why

we’re not doing that:

- Computers should do math, not people.

- We’re going to add more stuff, and we’d have to recalculate this number each time. Or wait until the end and come back. See #1.

Here’s what this looks like:

header_start:

dd 0xe85250d6 ; magic number

dd 0 ; protected mode code

dd header_end - header_start ; header length

header_end:

You don’t have to align the comments if you don’t want to. I usually don’t, but it looks nice and after we’re done with this file, we’re not going to mess with it again, so we won’t be constantly re-aligning them in the future.

The header_start: and header_end: things are called ‘labels’. Labels let

us use a name to refer to a particular part of our code. Labels also refer to the

memory occupied by the data and code which directly follows it. So in our code above

the label header_start points directly to the memory at the very beginning of our

magic number and thus to the very beginning of our header.

Our third dd line uses those two labels to do some math: the header length is

the value of header_end minus the value of header_start. Because header_start

and header_end are just the addresses of places in memory, we can simply subtract

to get the distance between those two addresses. When we compile this assembly

code, the assembler will do this calculation for us. No need to figure out

how many bytes there are by hand. Awesome.

You’ll also notice that I indented the dd statements. Usually, labels go in

the first column, and you indent actual instructions. How much you indent is up

to you; it’s a pretty flexible format.

The Checksum

The fourth field multiboot requires is a ‘checksum’. The idea is that we sum up some numbers, and then use that number to check that they’re all what we expected things to be. It’s similar to a hash, in this sense: it lets us and GRUB double-check that everything is accurate.

Here’s the checksum:

header_start:

dd 0xe85250d6 ; magic number

dd 0 ; protected mode code

dd header_end - header_start ; header length

; checksum

dd 0x100000000 - (0xe85250d6 + 0 + (header_end - header_start))

header_end:

Again, we’ll use math to let the computer calculate the sum for us. We add up

the magic number, the mode code, and the header length, and then subtract it

from a big number. dd then puts that value into this spot in our file.

By the way...

You might wonder why we're subtracting these values from 0x100000000. To answer this we can look at what the multiboot spec says about the checksum value in the header:

The field

checksumis a 32-bit unsigned value which, when added to the other magic fields (i.e.magic,architectureandheader_length), must have a 32-bit unsigned sum of zero.In other words:

checksum+magic_number+architecture+header_length= 0We could try and "solve for"

checksumlike so:

checksum= -(magic_number+architecture+header_length)But here's where it gets weird. Computers don't have an innate concept of negative numbers. Normally we get around this by using "signed integers", which is something we cover in an appendix. The point is we have an unsigned integer here, which means we're limited to representing only positive numbers. This means we can't literally represent -(

magic_number+architecture+header_length) in our field.If you look closely at the spec you'll notice it's strangely worded: it's asking for a value that when added to other values has a sum of zero. It's worded this way because integers have a limit to the size of numbers they can represent, and when you go over that size, the values wrap back around to zero. So 0xFFFFFFFF + 1 is.... 0x00000000. This is a hardware limitation: technically it's doing the addition correctly, giving us the 33-bit value 0x100000000, but we only have 32 bits to store things in so it can't actually tell us about that

1in the most significant digit position! We're left with the rest of the digits, which spell out zero.So what we can do here is "trick" the computer into giving us zero when we do the addition. Imagine for the sake of argument that

magic_number+architecture+header_lengthsomehow works out to be 0xFFFFFFFE. The number we'd add to that in order to make 0 would be 0x00000002. This is 0x100000000-0xFFFFFFFE, because 0x100000000 technically maps to 0 when we wrap around. So we replace 0xFFFFFFFE in our contrived example here withmagic_number+architecture+header_length. This gives us:dd 0x100000000 - (0xe85250d6 + 0 + (header_end - header_start))

Ending tag

After the checksum you can list a series of “tags”, which is a way for the OS to tell the bootloader to do some extra things before handing control over to the OS, or to give the OS some extra information once started. We donʼt need any of that yet, though, so we just need to include the required “end tag”, which looks like this:

header_start:

dd 0xe85250d6 ; magic number

dd 0 ; protected mode code

dd header_end - header_start ; header length

; checksum

dd 0x100000000 - (0xe85250d6 + 0 + (header_end - header_start))

; required end tag

dw 0 ; type

dw 0 ; flags

dd 8 ; size

header_end:

Here we use dw to define a ‘word’ instead of just data. Remember a ‘word’ is 16

bits or 2 bytes on the x86_64 architecture. The multiboot specification demands

that this be exactly a word. You’ll find that this is super common in operating systems:

the exact size and amount of everything matters. It’s just a side-effect of

working at a low level.

The Section

We have one last thing to do: add a ‘section’ annotation. We’ll talk more about sections later, so for now, just put what I tell you at the top of the file.

Here’s the final file:

section .multiboot_header

header_start:

dd 0xe85250d6 ; magic number

dd 0 ; protected mode code

dd header_end - header_start ; header length

; checksum

dd 0x100000000 - (0xe85250d6 + 0 + (header_end - header_start))

; required end tag

dw 0 ; type

dw 0 ; flags

dd 8 ; size

header_end:

That’s it! Congrats, you’ve written a multiboot compliant header. It’s a lot of esoterica, but it’s pretty straightforward once you’ve seen it a few times.

Assembling with nasm

We can’t use this file directly, we need to turn it into binary. We can use a program called an ‘assembler’ to ‘assemble’ our assembly code into binary code. It’s very similar to using a ‘compiler’ to ‘compile’ our source code into binary. But when it’s assembly, people often use the more specific name.

We will be using an assembler called nasm to do this. You should invoke

nasm like this:

$ nasm -f elf64 multiboot_header.asm

The -f elf64 says that we want to output a file using the elf64 file

format. ELF is a particular executable format that’s used by various UNIX

systems, and we’ll be using it too. The executable format just specifies how

exactly the bits will be laid out in the file. For example, will there be a

magic number at the beginning of the file for easier error checking? Or where in

the file do we specify whether our code and data is in a 32-bit or 64-bit

format? There are other formats, but ELF is pretty good.

After you run this command, you should see a multiboot_header.o file in

the same directory. This is our ‘object file’, hence the .o. Don't let the

word ‘object’ confuse you. It has nothing to do with anything object oriented.

‘Object files’ are just binary code with some metadata in a particular format -

in our case ELF. Later, we’ll take this file and use it to build our OS.

Summary

Congratulations! This is the first step towards building an operating system.

We learned about the boot process, the GRUB bootloader, and the Multiboot

specification. We wrote a Multiboot-compliant header file in assembly code, and

used nasm to create an object file from it.

Next, we’ll write the actual code that prints “Hello world” to the screen.

Hello, world!

Now that we’ve got the headers out of the way, let’s do the traditional first program: Hello, world!

The smallest kernel

Our hello world will be just 20 lines of assembly code. Let’s begin.

Open a file called boot.asm and put this in it:

start:

hlt

You’ve seen the name: form before: it’s a label. This lets us name a line

of code. We’ll call this label start, which is the traditional name.

GRUB will use this convention to know where to begin.

The hlt statement is our first bit of ‘real’ assembly. So far, we had just

been declaring data. This is actual, executable code. It’s short for ‘halt’.

In other words, it ends the program.

By giving this line a label, we can call it, sort of like a function. That’s what

GRUB does: “Call the function named start.” This function has just one

line: stop.

Unlike many other languages, you’ll notice that there’s no way to say if this ‘function’ takes any arguments or not. We’ll talk more about that later.

This code won’t quite work on its own though. We need to do a little bit more bookkeeping first. Here’s the next few lines:

global start

section .text

bits 32

start:

hlt

Three new bits of information. The first:

global start

This says “I’m going to define a label start, and I want it to be available

outside of this file.” If we don’t say this, GRUB won’t know where to find its

definition. You can kind of think of it like a ‘public’ annotation in other

languages.

section .text

We saw section briefly, but I told you we’d get to it later. The place where

we get to it is at the end of this chapter. For the moment, all you need to

know is this: code goes into a section named .text. Everything that comes

after the section line is in that section, until another section line.

bits 32

GRUB will boot us into protected mode, aka 32-bit mode. So we have to specify that directly. Our Hello World will only be in 32 bits. We’ll transition from 32-bit mode to 64-bit mode in the next chapter, but it’s a bit involved. So let’s just stay in protected mode for now.

That’s it! We could theoretically stop here, but instead, let’s actually print the “Hello world” text to the screen. We’ll start off with an ‘H’:

global start

section .text

bits 32

start:

mov word [0xb8000], 0x0248 ; H

hlt

This new line is the most complicated bit of assembly we’ve seen yet. There’s a lot packed into this little line.

The first important bit is mov. This is short for move, and it sorta looks

like this:

mov size place, thing

Oh, ; starts a comment, remember? So the ; H is just for us. I put this

comment here because this line prints an H to the screen!

Yup, it does. Okay, so here’s why: mov copies thing into place. The amount

of stuff it copies is determined by size.

; size place thing

; | | |

; V V V

mov word [0xb8000], 0x0248 ; H

“Copy one word: the number 0x0248 to ... some place.

The place looks like a number just like 0x0248, but it has square

brackets [] around it. Those brackets are special. They mean “the address

in memory located by this number.” In other words, we’re copying the number

0x0248 into the specific memory location 0xb8000. That’s what this line does.

Why? Well, we’re using the screen as a “memory mapped” device. Specific

positions in memory correspond to certain positions on the screen. And

the position 0xb8000 is one of those positions: the upper-left corner of the

screen.

By the way...

"Memory mapping" is one of the fundamental techniques used in computer engineering to help the CPU know how to talk to all the different physical components of a computer. The CPU itself is just a weird little machine that moves numbers around. It's not of any use to humans on its own: it needs to be connected to devices like RAM, hard drives, a monitor, and a keyboard. The way the CPU does this is through a bus, which is a huge pipeline of wires connecting the CPU to every single device that might have data the CPU needs. There's one wire per bit (since a wire can store a 1 or a 0 at any given time). A 32-bit bus is literally 32 wires in parallel that run from the CPU to a bunch of devices like Christmas lights around a house.

There are two buses that we really care about in a computer: the address bus and the data bus. There's also a third signal that lets all the devices know whether the CPU is requesting data from an input (reading, like from the keyboard) or sending data to an output (writing, like to the monitor via the video card). The address bus is for the CPU to send location information, and the data bus is for the CPU to either write data to or read data from that location. Every device on the computer has a unique hard coded numerical location, or "address", literally determined by how the thing is wired up at the factory. In the case of an input/read operation, when it sends

0x1001A003out on the address bus and the control signal notifies every device that it's a read operation, it's asking, "What is the data currently stored at location0x1001A003?" If the keyboard happens to be identified by that particular address, and the user is pressing SPACE at this time, the keyboard says, "Oh, you're talking to me!" and sends back the ASCII code0x00000020(for "SPACE") on the data bus.What this means is that memory on a computer isn't just representing things like RAM and your hard drive. Actual human-scale devices like the keyboard and mouse and video card have their own memory locations too. But instead of writing a byte to a hard drive for storage, the CPU might write a byte representing some color and symbol to the monitor for display. There's an industry standard somewhere that says video memory must live in the address range beginning

0xb8000. In order for computers to be able to work out of the box, this means that the BIOS needs to be manufactured to assume video lives at that location, and the motherboard (which is where the bus is all wired up) has to be manufactured to route a0xb8000request to the video card. It's kind of amazing this stuff works at all! Anyway, "memory mapped hardware", or "memory mapping" for short, is the name of this technique.

Now, we are copying 0x0248. Why this number? Well, it’s in three parts:

__ background color

/ __foreground color

| /

V V

0 2 48 <- letter, in ASCII

We’ll start at the right. First, two numbers are the letter, in ASCII. H is

72 in ASCII, and 48 is 72 in hexadecimal: (4 * 16) + 8 = 72. So this will

write H.

The other two numbers are colors. There are 16 colors available, each with a number. Here’s the table:

| Value | Color |

|-------|----------------|

| 0x0 | black |

| 0x1 | blue |

| 0x2 | green |

| 0x3 | cyan |

| 0x4 | red |

| 0x5 | magenta |

| 0x6 | brown |

| 0x7 | gray |

| 0x8 | dark gray |

| 0x9 | bright blue |

| 0xA | bright green |

| 0xB | bright cyan |

| 0xC | bright red |

| 0xD | bright magenta |

| 0xE | yellow |

| 0xF | white |

So, 02 is a black background with a green foreground. Classic. Feel free to

change this up, use whatever combination of colors you want!

So this gives us a H in green, over black. Next letter: e.

global start

section .text

bits 32

start:

mov word [0xb8000], 0x0248 ; H

mov word [0xb8002], 0x0265 ; e

hlt

Lower case e is 65 in ASCII, at least, in hexadecimal. And 02 is our same

color code. But you’ll notice that the memory location is different.

Okay, so we copied four hexadecimal digits into memory, right? For our H.

0248. A hexadecimal digit has sixteen values, which is 4 bits (for example, 0xf

would be represented in bits as 1111). Two of them make 8 bits, i.e. one byte.

Since we need half a word for the colors (02), and half a word for the H (48),

that’s one word in total (or two bytes). Each place that the memory address points

to can hold one byte (a.k.a. 8 bits or half a word). Hence, if our first memory

position is at 0, the second letter will start at 2.

You might be wondering, "If we're in 32 bit mode, isn't a word 32 bits?" since sometimes ‘word’ is used to talk about native CPU register size. Well, the ‘word’ keyword in the context of x86_64 assembly specifically refers to 2 bytes, or 16 bits of data. This is for reasons of backwards compatibility.

This math gets easier the more often you do it. And we won’t be doing that much more of it. There is a lot of working with hex numbers in operating systems work, so you’ll get better as we practice.

With this, you should be able to get the rest of Hello, World. Go ahead and try if you want: each letter needs to bump the location twice, and you need to look up the letter’s number in hex.

If you don’t want to bother with all that, here’s the final code:

global start

section .text

bits 32

start:

mov word [0xb8000], 0x0248 ; H

mov word [0xb8002], 0x0265 ; e

mov word [0xb8004], 0x026c ; l

mov word [0xb8006], 0x026c ; l

mov word [0xb8008], 0x026f ; o

mov word [0xb800a], 0x022c ; ,

mov word [0xb800c], 0x0220 ;

mov word [0xb800e], 0x0277 ; w

mov word [0xb8010], 0x026f ; o

mov word [0xb8012], 0x0272 ; r

mov word [0xb8014], 0x026c ; l

mov word [0xb8016], 0x0264 ; d

mov word [0xb8018], 0x0221 ; !

hlt

Finally, now that we’ve got all of the code working, we can assemble our

boot.asm file with nasm, just like we did with the multiboot_header.asm

file:

$ nasm -f elf64 boot.asm

This will produce a boot.o file. We’re almost ready to go!

Linking it together

Okay! So we have two different .o files: multiboot_header.o and boot.o.

But what we need is one file with both of them. Our OS doesn’t have the

ability to do anything yet, let alone load itself in two parts somehow. We just

want one big binary file.

Enter ‘linking’. If you haven’t worked in a compiled language before, you probably haven’t had to deal with linking before. Linking is how we’ll turn these two files into a single output: by linking them together.

Open up a file called linker.ldand put this in it:

ENTRY(start)

SECTIONS {

. = 1M;

.boot :

{

/* ensure that the multiboot header is at the beginning */

*(.multiboot_header)

}

.text :

{

*(.text)

}

}

This is a ‘linker script’. It controls how our linker will combine these files into the final output. Let’s take it bit-by-bit:

ENTRY(start)

This sets the ‘entry point’ for this executable. In our case, we called our

entry point by the name people use: start. Remember? In boot.asm? Same

name here.

SECTIONS {

Okay! I’ve been promising you that we’d talk about sections. Everything inside of these curly braces is a section. We annotated parts of our code with sections earlier, and here, in this part of the linker script, we will describe each section by name and where it goes in the resulting output.

. = 1M;

This line means that we will start putting sections at the one megabyte mark. This is the conventional place to put a kernel, at least to start. Below one megabyte is all kinds of memory-mapped stuff. Remember the VGA stuff? It wouldn’t work if we mapped our kernel’s code to that part of memory... garbage on the screen!

.boot :

This will create a section named boot. And inside of it...

*(.multiboot_header)

... goes every section named multiboot_header. Remember how we defined that

section in multiboot_header.asm? It’ll be here, at the start of the boot

section. That’s what we need for GRUB to see it.

.text :

Next, we define a text section. The text section is where you put code.

And inside of it...

*(.text)

... goes every section named .text. See how this is working? The syntax is a

bit weird, but it’s not too bad.

That’s it for our script! We can then use ld to link all of this stuff

together:

$ ld --nmagic --output=kernel.bin --script=linker.ld multiboot_header.o boot.o

Recall that on Mac OS X you will want to use the linker we installed to

~/opt and not your system linker. For example, if you did not change any of

the defaults in the installation script, this linker will be located at

$HOME/opt/bin/x86_64-pc-elf-ld.

By running this command, we do a few things:

--nmagic

TODO: https://github.com/intermezzOS/book/issues/30

--output=kernel.bin

This sets the name of our output file. In our case, that’s kernel.bin. We’ll be using

this file in the next step. It’s our whole kernel!

--script=linker.ld

This is the linker script we just made.

multiboot_header.o boot.o

Finally, we pass all the .o files we want to link together.

That’s it! We’ve now got our kernel in the kernel.bin file. Next, we’re going to

make an ISO out of it, so that we can load it up in QEMU.

Making an ISO

Now that we have our kernel.bin, the next step is to make an ISO. Remember

compact discs? Well, by making an ISO file, we can both test our Hello World

kernel in QEMU, as well as running it on actual hardware!

To do this, we’re going to use a GRUB tool called grub-mkrescue. We have to

create a certain structure of files on disk, run the tool, and we’ll get an

os.iso file at the end.

Doing so is not very much work, but we need to make the files in the right places. First, we need to make three directories:

$ mkdir -p isofiles/boot/grub

The -p flag to mkdir will make the directory we specify, as well as any

‘parent’ directories, hence the p. In other words, this will make an

isofiles directory, with a boot directory inside, and a grub directory

inside of that.

Next, create a grub.cfg file inside of that isofiles/boot/grub directory,

and put this in it:

set timeout=0

set default=0

menuentry "intermezzOS" {

multiboot2 /boot/kernel.bin

boot

}

This file configures GRUB. Let’s talk about the menuentry block first.

GRUB lets us load up multiple different operating systems, and it usually does

this by displaying a menu of OS choices to the user when the machine boots. Each

menuentry section corresponds to one of these. We give it a name, in this

case, intermezzOS, and then a little script to tell it what to do. First,

we use the multiboot2 command to point at our kernel file. In this case,

that location is /boot/kernel.bin. Remember how we made a boot directory

inside of isofiles? Since we’re making the ISO out of the isofiles directory,

everything inside of it is at the root of our ISO. Hence /boot.

Let’s copy our kernel.bin file there now:

$ cp kernel.bin isofiles/boot/

Finally, the boot command says “that’s all the configuration we need to do,

boot it up.“

But what about those timeout and default settings? Well, the default setting

controls which menuentry we want to be the default. The numbers start at zero,

and since we only have that one, we set it as the default. When GRUB starts, it

will wait for timeout seconds, and then choose the default option if the user

didn’t pick a different one. Since we only have one option here, we just set it to

zero, so it will start up right away.

The final layout should look like this:

isofiles/

└── boot

├── grub

│ └── grub.cfg

└── kernel.bin

Using grub-mkrescue is easy. We run this command:

$ grub-mkrescue -o os.iso isofiles

The -o flag controls the output filename, which we choose to be os.iso.

And then we pass it the directory to make the ISO out of, which is the

isofiles directory we just set up.

After this, you have an os.iso file with our teeny kernel on it. You could

burn this to a USB stick or CD and run it on an actual computer if you wanted

to! But doing so would be really annoying during development. So in the next

section, we’ll use an emulator, QEMU, to run the ISO file on our development

machine.

Running in QEMU

Let’s actually run our kernel! To do this, we’ll use QEMU, a full-system emulator. Using QEMU is fairly straightfoward:

$ qemu-system-x86_64 -cdrom os.iso

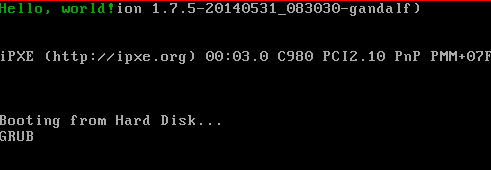

Type it in, hit Enter, and you should see Hello, world! (To exit, hit

Alt+2 and type quit in the console.)

If it shows up for you too, congrats! If not, something may have gone wrong. Double check that you followed the examples exactly. Maybe you missed something, or made a mistake while copying things down.

Note all of this other stuff behind the Hello World message: this part may look different, based on your version of GRUB, and also since we didn’t clear the screen, everything from GRUB just stays as it is. We’ll write a function to do that eventually...

Let’s talk about this command before we move on:

qemu-system-x86_64

We’re running the x86_64 variant of QEMU. While we have a 32-bit kernel for

now, soon we’ll have a 64-bit one. And since things are backwards compatible,

this works just fine.

-cdrom os.iso

We’re going to start QEMU with a CD-ROM drive, and its contents are the

os.iso file we made.

That’s it! Here’s the thing, though: while that wasn’t too complicated, it was a lot of steps. Each time we make a change, we have to go through all these steps over again. In the next section, we’ll use Make to do all these steps for us.

Automation with Make

Typing all of these commands out every time we want to build the project is

tiring and error-prone. It’s nice to be able to have a single command that

builds our entire project. To do this, we’ll use make. Make is a classic

bit of software that’s used for this purpose. At its core, make is fairly

simple:

- You create a file called

Makefile. - In this file, you define rules. Rules are composed of three things: targets, prerequisites, and commands.

- Targets describe what you are trying to build.

- Targets can depend on other targets being built before they can be built. These are called ‘prerequisites’.

- Commands describe what it takes to actually build the target.

Let’s start off with a very straightforward rule. Specifically, the first step

that we did was to build the Multiboot header by running nasm. Let’s build a

Makefile that does this. Open a file called Makefile and put this in it:

multiboot_header.o: multiboot_header.asm

nasm -f elf64 multiboot_header.asm

It’s very important that that nasm line uses a tab to indent. It can’t be

spaces. It has to be a tab. Yay legacy software!

Let’s try to run it before we talk about the details:

$ make

nasm -f elf64 multiboot_header.asm

$

If you see this output, success! Let’s talk about this syntax:

target: prerequisites

command

The bit before the colon is called a ‘target’. That’s the thing we’re trying to

build. In this case, we want to create the multiboot_header.o file, so we name

our target after that.

After the colon comes the ‘prerequisites’. This is a list of other targets that must

be built for this target to be built. In this case, building multiboot_header.o

requires that we have a multiboot_header.asm. We have no rule describing how

to build this file but it existing is enough to satisfy the dependency.

Finally, on the next line, and indented by a tab, we have a ‘command’. This is the shell command that you need to build the target.

Building boot.o is similar:

multiboot_header.o: multiboot_header.asm

nasm -f elf64 multiboot_header.asm

boot.o: boot.asm

nasm -f elf64 boot.asm

Let’s try to build it:

$ make

make: ‘multiboot_header.o’ is up to date.

$

Wait a minute, what? There’s two things going on here. The first is that make will build

the first target that you list by default. So a simple make will not build boot.o. To

build it, we can pass make the target name:

$ make boot.o

nasm -f elf64 boot.asm

Okay, so that worked. But what about this ‘is up to date’ bit?

By default, make will keep track of the last time you built a particular

target, and check the prerequisites’ last-modified-time against that time. If

the prerequisites haven’t been updated since the target was last built, then it

won’t re-execute the build command. This is a really powerful feature,

especially as we grow. You don’t want to force the entire project to re-build

just because you edited one file; it’s nicer to only re-build the bits that

interact with it directly. A lot of the skill of make is defining the right

targets to make this work out nicely.

It would be nice if we could build both things with one command, but as it

turns out, our next target, kernel.bin, relies on both of these .o files,

so let’s write it first:

multiboot_header.o: multiboot_header.asm

nasm -f elf64 multiboot_header.asm

boot.o: boot.asm

nasm -f elf64 boot.asm

kernel.bin: multiboot_header.o boot.o linker.ld

ld -n -o kernel.bin -T linker.ld multiboot_header.o boot.o

Let’s try building it:

$ make kernel.bin

ld -n -o kernel.bin -T linker.ld multiboot_header.o boot.o

Great! The kernel.bin target depends on multiboot_header.o, boot.o, and linker.ld. The

first two are the previous targets we defined, and linker.ld is a file on its own.

Let’s make make build the whole thing by default:

default: kernel.bin

multiboot_header.o: multiboot_header.asm

nasm -f elf64 multiboot_header.asm

boot.o: boot.asm

nasm -f elf64 boot.asm

kernel.bin: multiboot_header.o boot.o linker.ld

ld -n -o kernel.bin -T linker.ld multiboot_header.o boot.o

We can name targets whatever we want. In this case, default is a good

convention for the first rule, as it’s the default target. It relies on

the kernel.bin target, which means that we’ll build it, and as we previously

discussed, kernel.bin will build our two .os.

Let’s try it out:

$ make

make: Nothing to be done for ‘default’.

We haven’t edited our files, so everything is built. Let’s modify one. Open up

multiboot_header.asm in your editor, save it, and then run make:

$ make

nasm -f elf64 multiboot_header.asm

ld -n -o kernel.bin -T linker.ld multiboot_header.o boot.o

It re-built multiboot_header.o, and then kernel.bin. But it didn’t rebuild

boot.o, as we didn’t modify it at all.

Let’s add a new rule to build our iso. Rather than show the entire Makefile, I’m

going to start showing you what’s changed. First, we have to update our default

target, and then we have to write the new one:

default: os.iso

os.iso: kernel.bin grub.cfg

mkdir -p isofiles/boot/grub

cp grub.cfg isofiles/boot/grub

cp kernel.bin isofiles/boot/

grub-mkrescue -o os.iso isofiles

This is our first multi-command rule. make will execute all of the commands

that you list. In this case, to build the ISO, we need to create our isofiles

directory, and then copy grub.cfg and kernel.bin into the right place

inside of it. Finally, grub-mkrescue builds the ISO from that directory.

This rule assumes that grub.cfg is at our top-level directory, but it’s

currently in isofiles/boot/grub already. So let’s copy it out:

$ cp isofiles/boot/grub/grub.cfg .

And now we can build:

$ make

mkdir -p isofiles/boot/grub

cp grub.cfg isofiles/boot/grub

cp kernel.bin isofiles/boot/

grub-mkrescue -o os.iso isofiles

Sometimes, it’s nice to add targets which describe a semantic. In this case, building

the os.iso target is the same as building the project. So let’s say so:

default: build

build: os.iso

The default action is to build the project, and to build the project, we need to build

os.iso. But what about running it? Let’s add a rule for that:

default: run

run: os.iso

qemu-system-x86_64 -cdrom os.iso

You can choose the default here: do you want the default to be build, or run? Here’s what each looks like:

$ make # build is the default

$ make run

or

$ make # run is the default

$ make build

I prefer to make run the default.

Finally, there’s another useful common rule: clean. The clean rule should remove all

of the generated files, and allow us to do a full re-build. As such it’s a bunch of rm

statements:

clean:

rm -f multiboot_header.o

rm -f boot.o

rm -f kernel.bin

rm -rf isofiles

rm -f os.iso

Now there's just one more wrinkle. We have four targets that aren't really files

on disk, they are just actions: default, build, run and clean. Remember

we said earlier that make decides whether or not to execute a command by

comparing the last time a target was built with the last-modified-time of its

prerequisites? Well, it determines the last time a target was built by looking

at the last-modified-time of the target file. If the target file doesn't exist,

then it's definitely out-of-date so the command will be run.

But what if we accidentally create a file called clean? It doesn't have any

prerequisites so it will always be up-to-date and the commands will never be

run! We need a way to tell make that this is a special target, it isn't really

a file on disk, it's an action that should always be executed. We can do this

with a magic built-in target called .PHONY:

.PHONY: default build run clean

Here’s our final Makefile:

default: run

.PHONY: default build run clean

multiboot_header.o: multiboot_header.asm

nasm -f elf64 multiboot_header.asm

boot.o: boot.asm

nasm -f elf64 boot.asm

kernel.bin: multiboot_header.o boot.o linker.ld

ld -n -o kernel.bin -T linker.ld multiboot_header.o boot.o

os.iso: kernel.bin grub.cfg

mkdir -p isofiles/boot/grub

cp grub.cfg isofiles/boot/grub

cp kernel.bin isofiles/boot/

grub-mkrescue -o os.iso isofiles

build: os.iso

run: os.iso

qemu-system-x86_64 -cdrom os.iso

clean:

rm -f multiboot_header.o

rm -f boot.o

rm -f kernel.bin

rm -rf isofiles

rm -f os.iso

You'll notice that there is a fair amount of repetition here. At first, that's pretty okay: make can be a bit hard to understand, and while it has features that let you de-duplicate things, they can also get unreadable really fast.

Creating a build subdirectory

Here's one example of a tweak we can do: nasm supports a -o flag, which

controls the name of the output file. We can use this to build everything in

a build subdirectory. This is nice for a number of reasons, but one of the

simplest is that all of our generated files will go in a single directory,

which means that it’s much easier to keep track of them: they’ll all be in one

place.

Let’s make some changes: More specifically, three of them:

build/multiboot_header.o: multiboot_header.asm

mkdir -p build

nasm -f elf64 multiboot_header.asm -o build/multiboot_header.o

The first one is the name of the rule. We have to add a build/ in front of

the filename. This is because we’re going to be putting this file in that

directory now.

Second, we added another line: mkdir. We used -p to make directories

before, but in this case, the purpose of the flag is to not throw an error

if the directory already exists. We need to try to make this directory

when we build so that we can put our .o file in it!

Finally, we add the -o flag to nasm. This will create our output file in

that build directory, rather than in the current one.

With that, we’re ready to modify boot.o as well:

build/boot.o: boot.asm

mkdir -p build

nasm -f elf64 boot.asm -o build/boot.o

These changes are the same, just with boot instead of multiboot_header.

Next up: kernel.bin:

build/kernel.bin: build/multiboot_header.o build/boot.o linker.ld

ld -n -o build/kernel.bin -T linker.ld build/multiboot_header.o build/boot.o

We add build in no fewer than six places. Whew! At least it’s

straightforward.

build/os.iso: build/kernel.bin grub.cfg

mkdir -p build/isofiles/boot/grub

cp grub.cfg build/isofiles/boot/grub